Core Concepts

Components and Interactions

EdgeChains consists of several key components that work together to provide its functionality:

Language Chain: Language Chains are the chains in EdgeChains that handle language-related tasks. They generate and interpret natural language prompts, perform language-based computations, and interact with the underlying LLM. Language Chains build on the capabilities of the OpenAI GPT model and give developers an interface for working effectively with natural language input.

Reasoning+Acting (ReAct) Chains: ReAct Chains combine language processing with computation logic so that a model can both reason and act on complex tasks. The language model generates verbal reasoning traces and text actions in an interleaved manner. Actions receive feedback from the external environment, while reasoning traces update the model's internal state: the model reasons over the context and incorporates information that is useful for later reasoning and acting.

OpenAI Client: The interface for communicating with OpenAI's GPT models or other LLMs.

Redis Service: Provides a fault-tolerant and scalable data storage solution for managing context and state within the EdgeChains system.
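To make the interleaving of reasoning traces, actions, and feedback more concrete, here is a minimal Java sketch of a ReAct loop. It is not EdgeChains' implementation: the `LlmClient` interface, the `ReActLoop` and `ScriptedLlm` classes, and the `Thought:` / `Action: tool[input]` / `Observation:` / `Final Answer:` text format are assumptions made for illustration. In a real application, `LlmClient` would be backed by something like the OpenAI Client, and the tools would call external services.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical stand-in for an LLM client such as the OpenAI Client.
interface LlmClient {
    String complete(String prompt);
}

// Minimal ReAct loop (assumed text format): each model reply contains
// "Thought: ..." followed by either "Action: tool[input]" or "Final Answer: ...".
final class ReActLoop {
    private final LlmClient llm;
    private final Map<String, Function<String, String>> tools;
    private final int maxSteps;

    ReActLoop(LlmClient llm, Map<String, Function<String, String>> tools, int maxSteps) {
        this.llm = llm;
        this.tools = tools;
        this.maxSteps = maxSteps;
    }

    String run(String question) {
        // The transcript is the context the model reasons over on every step.
        StringBuilder transcript = new StringBuilder("Question: " + question + "\n");
        for (int step = 0; step < maxSteps; step++) {
            String reply = llm.complete(transcript.toString()).trim();
            transcript.append(reply).append('\n'); // reasoning trace plus action
            int fin = reply.indexOf("Final Answer:");
            if (fin >= 0) {
                return reply.substring(fin + "Final Answer:".length()).trim();
            }
            int act = reply.indexOf("Action:");
            if (act < 0) {
                throw new IllegalStateException("Reply has neither an Action nor a Final Answer");
            }
            // Parse "tool[input]" and run the tool against the environment.
            String action = reply.substring(act + "Action:".length()).trim();
            int open = action.indexOf('[');
            int close = action.lastIndexOf(']');
            String name = open > 0 ? action.substring(0, open).trim() : action;
            String input = (open > 0 && close > open) ? action.substring(open + 1, close) : "";
            Function<String, String> tool = tools.get(name);
            String observation = tool == null ? "Unknown tool: " + name : tool.apply(input);
            // Feed the environment's feedback back into the model's context.
            transcript.append("Observation: ").append(observation).append('\n');
        }
        throw new IllegalStateException("No final answer within " + maxSteps + " steps");
    }
}

// Scripted fake model for offline testing: returns canned replies in order
// and records every prompt it was given.
final class ScriptedLlm implements LlmClient {
    private final Deque<String> replies;
    final List<String> prompts = new ArrayList<>();

    ScriptedLlm(List<String> replies) {
        this.replies = new ArrayDeque<>(replies);
    }

    @Override
    public String complete(String prompt) {
        prompts.add(prompt);
        return replies.removeFirst();
    }
}
```

With a scripted model that first replies `Action: lookup[France]` and then `Final Answer: Paris`, and a `lookup` tool that maps `France` to `Paris`, `run(...)` returns `"Paris"`, and the second prompt the model sees contains `Observation: Paris`. The `maxSteps` cap is a design choice that keeps a model which never produces a final answer from looping forever.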

Workflow and Data Flow

The workflow and data flow in EdgeChains follow a well-defined process:

Input: Language prompts or queries are provided to the Language Chain as input.

Language Chain Processing: The Language Chain processes the input by leveraging the LLM and other language-related components to generate relevant language-based outputs.

ReAct Chain Integration: If necessary, the Language Chain output may be passed to the ReAct Chain for further reasoning and acting.

Connectors Interaction: Connectors are used to interact with external services, APIs, or databases as needed. These connectors facilitate data retrieval, storage, or other operations required by the application.

Output: The final output, which could be a language-based response, an action execution, or any other desired result, is produced by the chain and either presented to the user or passed on to downstream components for further processing.
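The steps above can be sketched as a small pipeline in which each stage is a plain function. This is a hypothetical Java sketch, not the EdgeChains API: the `Workflow` record, the `Connector` interface, the `needsReasoning` predicate that decides when to hand off to the ReAct Chain, and the choice to run connectors one after another on the chain's output are all assumptions.

```java
import java.util.List;
import java.util.function.Predicate;
import java.util.function.UnaryOperator;

// Hypothetical connector to an external service, API, or database.
@FunctionalInterface
interface Connector {
    String call(String payload);
}

// Hypothetical wiring of the workflow steps; each stage is a plain function.
record Workflow(UnaryOperator<String> languageChain,
                Predicate<String> needsReasoning,
                UnaryOperator<String> reactChain,
                List<Connector> connectors) {

    String run(String input) {
        // Language Chain processing of the input prompt or query.
        String output = languageChain.apply(input);
        // Optional ReAct Chain integration when further reasoning is needed.
        if (needsReasoning.test(output)) {
            output = reactChain.apply(output);
        }
        // Connectors interaction: each connector receives the current output
        // and returns the (possibly enriched) value passed to the next one.
        for (Connector connector : connectors) {
            output = connector.call(output);
        }
        // Output: returned to the caller (the user or a downstream component).
        return output;
    }
}
```

Modeling each stage as a function keeps the stages independently testable. For example, with a Language Chain stub `prompt -> "summary of " + prompt` and a predicate that routes only outputs containing `?` to the ReAct Chain, `run("quarterly report")` returns `"summary of quarterly report"` without invoking the ReAct stage.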

Schematic for Quick Overview

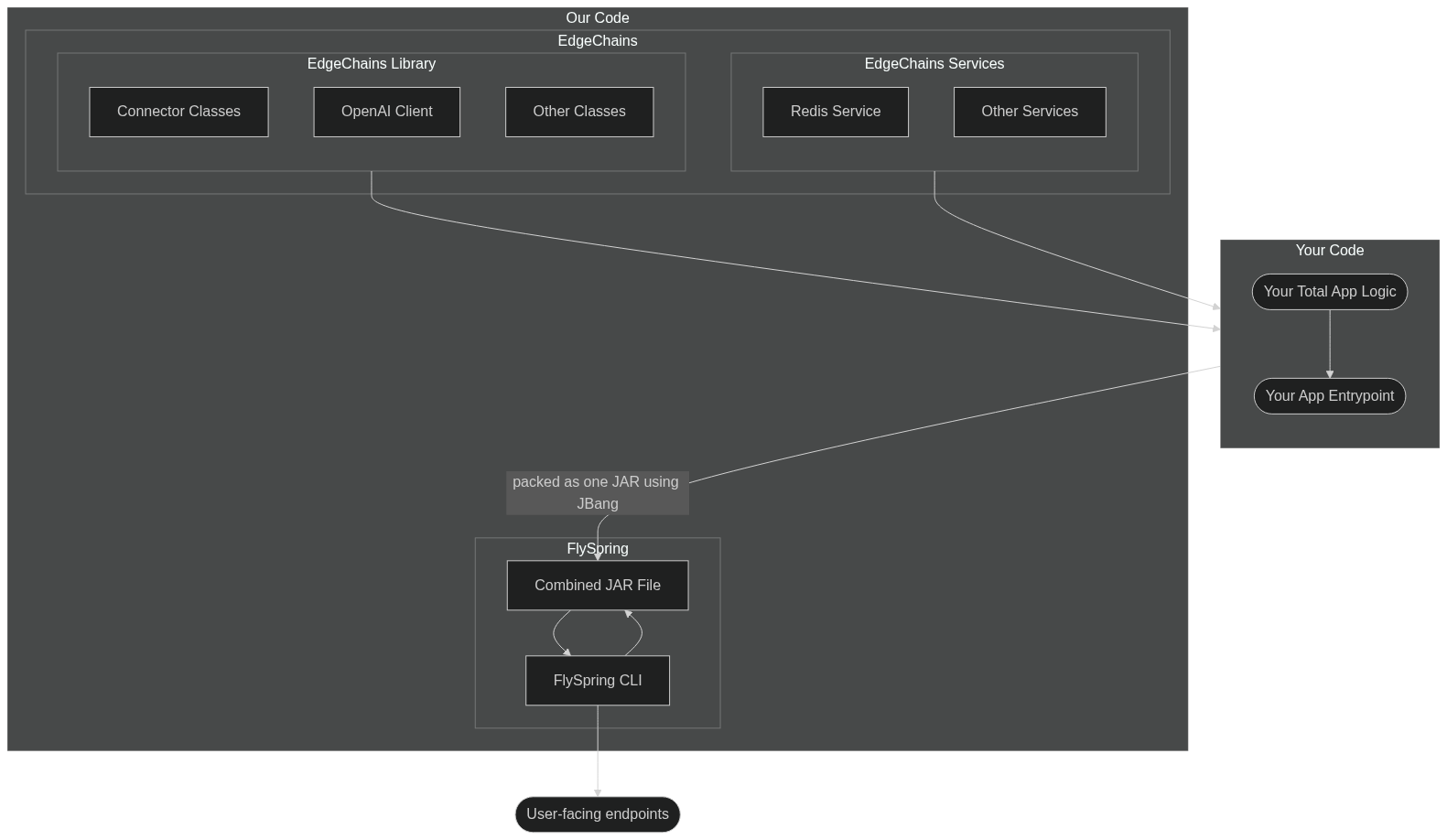

The diagram illustrates the architecture of an application built from EdgeChains, FlySpring, and your own code. Here's a breakdown of the components and their relationships:

In this architecture, your code contains the application's logic and serves as the entry point that connects to the overall app logic. The EdgeChains Library provides connector classes for communicating with external services, an OpenAI client for interacting with OpenAI's services, and other classes that support EdgeChains functionality. EdgeChains Services use a Redis service and include other services that extend EdgeChains' functionality. FlySpring consists of a combined JAR file that includes the FlySpring CLI.

In your codebase, FlySpring and EdgeChains together with your code make up the overall application: your code is packaged as a single JAR file using JBang and connected to FlySpring, and the EdgeChains Library and EdgeChains Services are connected to your code as well. User-facing endpoints interact with FlySpring. It is recommended to keep the main() function, which serves as the application's entry point, in a separate file.
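A minimal sketch of keeping main() separate, assuming a JBang script: the first line is JBang's standard shebang-style header, `App` holds only `main()`, and `AppLogic` is a hypothetical stand-in for your application logic. Both classes appear in one block only so the sketch compiles on its own; in a real project `AppLogic` would live in its own file (JBang can pull additional source files into a build with its `//SOURCES` directive). FlySpring and EdgeChains wiring is deliberately omitted, since their APIs are not described in this section.

```java
///usr/bin/env jbang "$0" "$@" ; exit $?

// App.java: holds only the entry point and delegates all work to the app logic.
class App {
    public static void main(String[] args) {
        // Join command-line arguments into a single request; fall back to a default.
        String request = args.length > 0 ? String.join(" ", args) : "hello";
        System.out.println(new AppLogic().handle(request));
    }
}

// Hypothetical application logic. In a real project this class would sit in its
// own file (for example AppLogic.java) rather than next to main().
class AppLogic {
    String handle(String request) {
        return "Handled: " + request;
    }
}
```

Because `App` contains nothing but argument handling and a call into `AppLogic`, the logic can be tested or reused without going through the entry point; running `jbang App.java ping` should print `Handled: ping`.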